Taming Streaming Data with Lenses by Landoop

During my tenure as a Product Manager at Splunk, I had the chance to meet with several Fortune 500 customers who wanted to create a central data transfer backbone. Their goal was to swap or add new destinations for their data, such as a warehouse, an application consumer, or a solution like Splunk. Such a backbone would allow IT and other stakeholders to mix and match different technologies without any changes to the most dreaded part of a pipeline, the ingestion layer. More often than not, that backbone is Apache Kafka, and its usage has been championed by several high-profile tech companies, such as LinkedIn (the creators of the project) and Netflix.

As with any Open Source Software (OSS) project, the out-of-the-box version of Apache Kafka, or even its commercial distribution, doesn’t check all the boxes. OSS requires human investment to become useful, and user productivity is not its greatest strength. We can safely say that most modern enterprises have chosen to standardize their stack on OSS. Several successful companies, such as Cloudera, Elastic, Red Hat, Mesosphere, DataStax and Docker, have picked up the task of making OSS easier to use and manage. At the moment, this is the core value proposition for almost any software infrastructure provider in the world. OSS allowed developers to innovate without permission from the IT and Procurement departments; as a result, it became the driver for the adoption of new technologies.

Antonis and Christina founded Landoop in 2016 and embarked on a journey to help enterprises adopt and leverage streaming data. Using, deploying, managing and building applications for a streaming platform remains a tedious and labor-intensive task. The more enterprises they engaged with, the more clearly they realized that they had to build most of the missing parts themselves. The technology at the core of this transformation was the popular OSS project Apache Kafka.

And build they did. Stream Reactor, standardizing ETL over Kafka; the all-inclusive Fast Data Dev container for developers; Kafka Topics UI, allowing developers to view their data in motion; and Schema Registry UI, bringing sanity to admins: many of the most popular tools in the Apache Kafka community today are made by Landoop.

The team proceeded to engage with larger customers, the likes of SAS and British Gas. They quickly found out that the main hindrance to the adoption of the technology is the shortage of operators and developers who are highly trained in these new concepts. That might be less the case for heavily funded tech startups, but it becomes a serious bottleneck for large corporations across the world. Telcos in Asia, banks in London, agtech sensor networks in the Midwest and utility companies in Italy cannot afford to hire and train all the people needed to build a streaming data platform and its applications. Apache Kafka had to be humanized and its management automated.

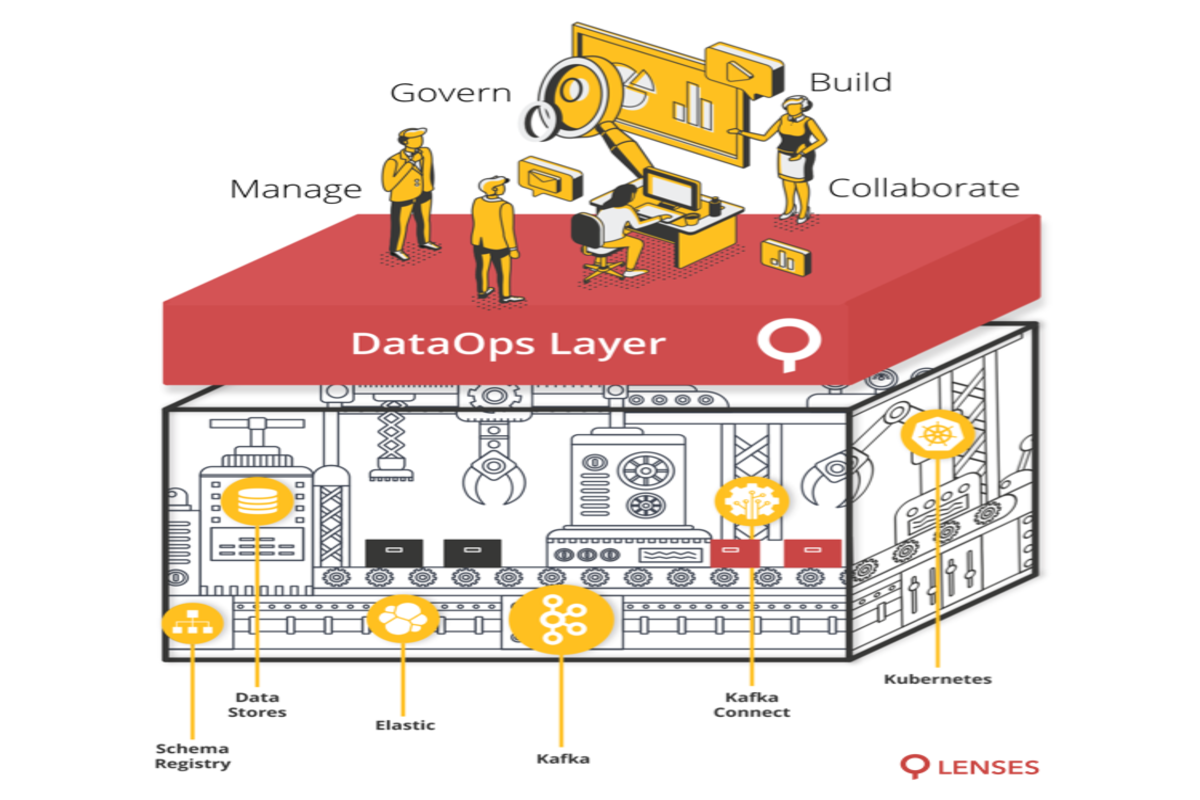

Enter Lenses for Apache Kafka, by Landoop. When I first heard about the company, my eyes rolled. Still, nothing could prepare me for the demo I saw a few days later when Antonis, Christina, Andrew, and Stefan, who joined the company a bit later, walked me through what was an early version of Lenses. Using Lenses, any developer or even business analyst can use Kafka without having to learn all of its primitives, idiosyncrasies and moving parts. Landoop has brought SQL semantics to the streaming world, allowing a user to repurpose her existing skills to fetch data and then query, analyze, transform and eventually send it to a destination of choice. Lenses does all the heavy lifting for you. It can scale out the execution of queries via Kubernetes or Kafka Connect, allow operators to fine-tune permissions and stay compliant, and connect to over 25 different sources. Landoop has created a unique, full-blown offering that helps enterprises adopt and leverage state-of-the-art streaming technologies without the hassle.

Lenses is the kind of product that brings several personas into the same room. Developers can debug their streams and build out functionality on top of Lenses, thanks to API integrations. Ops can monitor and scale out the cluster. Analysts and business owners can tap the stream for true real-time intelligence. We believe that Lenses is one of the pivotal products that enterprises are standardizing upon.

The scalability, manageability, and cost-effectiveness of Lenses for Apache Kafka are not only timely but critical, at a time when companies are rapidly building out new infrastructure and applications that leverage streaming technologies. We are excited about investing in a leader in this radical shift. Most importantly, though, we believe that the team has what it takes to build a product and a company that can define their space.

If you are an engineer with a deep interest in DevOps, CI, containers, orchestration, Scala and Akka, make sure to check out the open positions.